Tutorial: Creating AWS Lambda with Terraform

- JP

- Mar 28, 2023

Updated: Apr 9, 2023

In this post, we're going to create an AWS Lambda function with Terraform, using Java as the runtime. If you haven't worked with Lambda or Terraform before, I recommend reading the introductory posts on Lambda and on the first steps with Terraform before continuing.

The idea of this post is to create an AWS Lambda that will be triggered by CloudWatch Events on an automated schedule defined by a cron or rate expression.

Usually we could create any AWS resource using the console, but here we're going to use Terraform as an IaC (Infrastructure as Code) tool that will create every resource needed to run our AWS Lambda. As the runtime, we chose Java, so it's important that you have at least a basic understanding of Maven.

Remember that Lambda supports different languages as runtimes, such as Java, Python, .NET, Node.js and more. Even though this is a Java project, the most important part of this post is understanding Lambda and how you can provision it through Terraform.

Intro

Terraform will be responsible for creating all the resources in this post: the Lambda, roles, policies, CloudWatch Events and the S3 bucket where we're going to keep our application's JAR file.

Our Lambda will be invoked by CloudWatch Events every 3 minutes, running a simple Java method that prints a message. It's a simple example that you can reuse in your projects.

Note that we're using S3 to store our deployment package, a JAR file in this case. AWS recommends uploading larger deployment packages to S3 instead of uploading them directly to Lambda, since S3 has better support for large files. You don't need to upload the file manually either; Terraform will take care of that during the deploy phase.

Creating the project

For this post we're going to use Java as the language and Maven as the dependency manager, so we need to generate a Maven project that will create our project structure.

If you don't know how to generate a Maven project, I recommend reading the post where I show how to do it.

Project structure

After generating the Maven project, we're going to create the files and packages described below, except pom.xml, which was created by the Maven generator.

Maven projects conventionally use the src/main/java/ folder structure.

Within the java/ folder, create a package called coffee.tips.lambda and, inside it, a Java class named Handler.java.

Updating pom.xml

For this post, add the following dependencies and build configuration to pom.xml.

Creating a Handler

A handler is basically the Lambda's controller. Lambda always looks for a handler to start its process; in short, it's the first code to be invoked.

For this post, we created a basic handler that just logs messages when invoked by CloudWatch Events. Note that we implemented the RequestHandler interface, which lets the handler receive a Map<String, Object> as its parameter. For this example, though, we won't explore the data in this parameter.

Understanding Terraform files

Now we're going to understand how the resources will be created using Terraform.

vars.tf

The vars.tf file is where we declare the variables. Variables provide flexibility when we need to work with different resources.
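As a rough idea of what such a file can look like, here is a minimal sketch. It assumes only the two variables this post refers to later, region and bucket; the descriptions and types are illustrative, not taken from the original project.

```hcl
# vars.tf (sketch): only "region" and "bucket" are mentioned in this post;
# the descriptions and types are assumptions for illustration.

variable "region" {
  description = "AWS region where the resources will be created"
  type        = string
}

variable "bucket" {
  description = "Name of the S3 bucket that stores the Lambda JAR file"
  type        = string
}
```

Since no default values are declared, Terraform will expect them to be supplied, for example through a .tfvars file.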

vars.tfvars

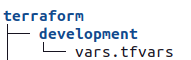

Now we need to set the values of these variables. So, let's create a folder called /development inside the terraform folder.

After creating the folder, create a file called vars.tfvars inside it, as shown in the image, and set the values of the variables in it.

Note that for the bucket field you must specify the name of your own bucket. Bucket names must be globally unique.
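For illustration, a vars.tfvars file could look like the sketch below. Both values are placeholders that I'm assuming here, so replace them with your own region and bucket name.

```hcl
# development/vars.tfvars (sketch): both values are placeholders.
region = "us-east-1"               # assumed region, use the one you prefer
bucket = "your-unique-bucket-name" # must not clash with any existing bucket
```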

main.tf

In this file we just declare the provider. The provider is the cloud service we're going to use to create our resources. In this case, we're using AWS as the provider, and Terraform will download the packages needed to create the resources.

Note that for the region field, we're using the var keyword to get the region value set in the vars.tfvars file.
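A minimal sketch of this file, assuming a variable named region is declared in vars.tf, might be:

```hcl
# main.tf (sketch): declares where the AWS provider comes from and
# configures it with the region read from the "region" variable.

terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

provider "aws" {
  region = var.region
}
```

The required_providers block is optional in a simple setup like this one, but it makes explicit which provider Terraform should download.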

s3.tf

This file is where we declare the resources related to S3. In this case, we only create the S3 bucket, but if you want to create more S3-related resources such as policies, roles or S3 notifications, you can declare them here. It's a way to organize the files by resource.

Note again that we're using the var keyword, this time to reference the bucket variable declared in the vars.tf file.
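As a sketch, the bucket declaration can be as small as the block below. The resource name lambda_artifacts is hypothetical and chosen only for this example; the bucket variable is assumed to be declared in vars.tf.

```hcl
# s3.tf (sketch): "lambda_artifacts" is a hypothetical resource name.
resource "aws_s3_bucket" "lambda_artifacts" {
  bucket = var.bucket
}
```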

lambda.tf

Finally, our last Terraform file. In this file we declare the Lambda itself and the resources related to it.

Now I think it's worth explaining some details about the above file. So, let's do this.

1. We declared 2 aws_iam_policy_document data sources that describe which actions the resources assigned to these policies can perform.

2. The aws_iam_role resource provides the IAM role that controls what the Lambda is allowed to do.

3. The aws_iam_role_policy resource provides an inline policy for the IAM role, linking the previous role to the policy described by aws_iam_policy_document.aws_iam_policy_coffee_tips_aws_lambda_iam_policy_document.

4. We declared the aws_s3_object resource because we want to store our JAR file on S3. During the deploy phase, Terraform will take the JAR file created in the target folder and upload it to S3.

depends_on: the resource that Terraform must create before the current one.

bucket: the name of the bucket where the JAR file will be stored.

key: the JAR's name.

source: the source file's location.

etag: triggers an update when its value changes.

5. aws_lambda_function is the resource responsible for creating the Lambda, and we need to fill in some fields such as:

function_name: the Lambda's name.

role: the Lambda role declared in the previous steps, which provides access to AWS services and resources.

handler: the fully qualified name of the handler class, in this case coffee.tips.lambda.Handler.

source_code_hash: a hash of the deployment package; when it changes, the Lambda is updated.

s3_bucket: the name of the bucket where the JAR file is stored.

s3_key: the JAR's name.

runtime: the runtime the Lambda will use, chosen from the programming languages Lambda supports. For this example, java11.

timeout: the Lambda's execution timeout.

6. aws_cloudwatch_event_rule is the rule for the CloudWatch event execution. Here we can set a cron or rate expression through the schedule_expression field to define when the Lambda will run.

7. aws_cloudwatch_event_target is the resource responsible for triggering the Lambda from CloudWatch Events.

8. aws_lambda_permission grants CloudWatch Events permission to invoke the Lambda.
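To tie the eight points together, here is a condensed sketch of how such a lambda.tf could be laid out. It is not the original file: every resource name, the JAR name and path, the timeout and the logging permissions are assumptions made for illustration. It also assumes a bucket variable and an aws_s3_bucket resource named lambda_artifacts declared elsewhere in the configuration.

```hcl
# lambda.tf (sketch). Names, paths and values below are illustrative assumptions.

locals {
  jar_name = "lambda-example.jar" # hypothetical artifact name
  jar_path = "${path.module}/../target/lambda-example.jar"
}

# 1. Trust policy: lets the Lambda service assume the role.
data "aws_iam_policy_document" "lambda_assume_role" {
  statement {
    actions = ["sts:AssumeRole"]
    principals {
      type        = "Service"
      identifiers = ["lambda.amazonaws.com"]
    }
  }
}

# 1. Permissions policy: lets the function write to CloudWatch Logs.
data "aws_iam_policy_document" "lambda_logging" {
  statement {
    actions   = ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"]
    resources = ["arn:aws:logs:*:*:*"]
  }
}

# 2. Role the function runs with.
resource "aws_iam_role" "lambda_role" {
  name               = "example-lambda-role"
  assume_role_policy = data.aws_iam_policy_document.lambda_assume_role.json
}

# 3. Inline policy attached to the role.
resource "aws_iam_role_policy" "lambda_logging" {
  name   = "example-lambda-logging"
  role   = aws_iam_role.lambda_role.id
  policy = data.aws_iam_policy_document.lambda_logging.json
}

# 4. Uploads the JAR built by Maven to S3.
resource "aws_s3_object" "lambda_jar" {
  depends_on = [aws_s3_bucket.lambda_artifacts] # assumed to exist in s3.tf
  bucket     = var.bucket
  key        = local.jar_name
  source     = local.jar_path
  etag       = filemd5(local.jar_path) # changes whenever the JAR changes
}

# 5. The Lambda function itself.
resource "aws_lambda_function" "example" {
  function_name    = "example-lambda"
  role             = aws_iam_role.lambda_role.arn
  handler          = "coffee.tips.lambda.Handler"
  source_code_hash = filebase64sha256(local.jar_path)
  s3_bucket        = var.bucket
  s3_key           = aws_s3_object.lambda_jar.key
  runtime          = "java11"
  timeout          = 10 # seconds, assumed value
}

# 6. Schedule rule; a cron(...) expression would also be accepted here.
resource "aws_cloudwatch_event_rule" "schedule" {
  name                = "example-lambda-schedule"
  schedule_expression = "rate(3 minutes)"
}

# 7. Points the rule at the function.
resource "aws_cloudwatch_event_target" "lambda" {
  rule = aws_cloudwatch_event_rule.schedule.name
  arn  = aws_lambda_function.example.arn
}

# 8. Allows CloudWatch Events to invoke the function.
resource "aws_lambda_permission" "allow_events" {
  statement_id  = "AllowExecutionFromCloudWatch"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.example.function_name
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.schedule.arn
}
```

Referencing aws_s3_object.lambda_jar.key in s3_key, instead of repeating the name, also tells Terraform to upload the JAR before creating the function.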

Packaging

Now that you're familiar with Lambda and Terraform, let's package our project with Maven before creating the Lambda. The idea is to create a JAR file that will be stored on S3 and used for the Lambda executions.

For this example, we're going to package locally. Remember that in a production environment we could use a continuous integration tool such as Jenkins, Drone or even GitHub Actions to automate this process.

First, open the terminal, make sure you're in the project's root directory and run the following Maven command:

mvn clean install -U

Besides packaging the project, this command will download and install the dependencies declared in the pom.xml file.

After running the above command, a JAR file will be generated inside the target/ folder, which is also created by this command.

Running Terraform

Well, we're almost there. Now, let's provision our Lambda via Terraform. Inside the terraform folder, run the following commands in the terminal:

terraform init

The above command will initialize Terraform, downloading the provider plugins and preparing the working directory.

Next, let's run the plan command to check which resources will be created.

terraform plan -var-file=development/vars.tfvars

After running it, you'll see in the console the list of resources that Terraform plans to create.

Finally, we can apply the plan to create the resources with the following command:

terraform apply -var-file=development/vars.tfvars

After running it, you must confirm that you want to perform the actions by typing "yes".

Now the provisioning is complete!

Lambda Running

Go to the AWS console to see the Lambda execution.

Open the Monitor tab.

Open the Logs tab inside the Monitor section.

See the messages that are printed on every scheduled invocation.

Destroy

AWS will keep charging you if you don't destroy these resources, so I recommend destroying them to avoid unnecessary charges. To do so, run the command below.

terraform destroy -var-file=development/vars.tfvars

Remember you need to confirm this operation, cool?

Conclusion

In this post, we created an AWS Lambda provisioned by Terraform. Lambda is an AWS service that we can use for many different use cases, making it easier to compose a software architecture.

We could also see that Terraform brings flexibility when creating resources for different cloud services and is easy to adopt in software projects.

Github repository

Books to study and read

If you want to learn more about AWS and reach a high level of knowledge, I strongly recommend reading the following book(s):

AWS Cookbook is a practical guide containing 70 familiar recipes about AWS resources and how to solve different challenges. It's a well-written, easy-to-understand book covering key AWS services through practical examples. AWS, or Amazon Web Services, is the most widely used cloud service in the world today. If you want to understand more about the subject and be well positioned in the market, I strongly recommend studying it.

Well that’s it, I hope you enjoyed it!